Linux ships with KVM, a hypervisor in the same class as VMware and VirtualBox. Its standout capability is GPU passthrough, which gives the guest system native access to the GPU.

The feature is great, but the setup is a bit complicated because it involves low-level Linux configuration to keep the host kernel's graphics drivers away from the GPU, so that the guest can use it exclusively.

To do so, follow these steps.

1. Install KVM and the related tools as follows:

        sudo apt-get install qemu-kvm libvirt-bin virtinst bridge-utils cpu-checker virt-manager ovmf
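Before going further, it is worth confirming that the CPU exposes hardware virtualization at all. The snippet below is a minimal sketch, not part of the original setup: `count_virt_flags` is a hypothetical helper name, and it only looks for the `vmx` (Intel VT-x) or `svm` (AMD-V) flag in `/proc/cpuinfo`.

```shell
# Count the "flags" lines in cpuinfo that advertise hardware virtualization
# (vmx = Intel VT-x, svm = AMD-V). The path is a parameter only so the
# function can be tried on a saved copy of the file.
count_virt_flags() {
    cpuinfo="${1:-/proc/cpuinfo}"
    grep -c -E '^flags.*[[:space:]](vmx|svm)([[:space:]]|$)' "$cpuinfo"
}

# Usage on the host:
#   count_virt_flags     # a non-zero count means the flag is present
```

A non-zero count only shows that the CPU has the feature. The `kvm-ok` command from the cpu-checker package installed above also reports whether KVM acceleration can actually be used.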

2. Rebuild the initramfs so that the VFIO modules load before the radeon or amdgpu driver does. List the modules in /etc/modules and /etc/initramfs-tools/modules, then run update-initramfs.

        jimmy@jimmy-home:~$ cat /etc/modules
        # /etc/modules: kernel modules to load at boot time.
        #
        # This file contains the names of kernel modules that should be loaded
        # at boot time, one per line. Lines beginning with "#" are ignored.
        pci_stub
        vfio
        vfio_iommu_type1
        vfio_pci
        vfio_virqfd

        jimmy@jimmy-home:~$ cat /etc/initramfs-tools/modules
        # List of modules that you want to include in your initramfs.
        # They will be loaded at boot time in the order below.
        #
        # Syntax: module_name [args ...]
        #
        # You must run update-initramfs(8) to effect this change.
        #
        # Examples:
        #
        # raid1
        # sd_mod
        pci_stub ids=1002:683d,1002:aab0
        vfio
        vfio_iommu_type1
        vfio_pci
        vfio_virqfd

        jimmy@jimmy-home:~$ sudo update-initramfs -u
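A typo in either modules file is easy to miss, so a quick check before rebuilding can save a reboot. The following is a rough sketch: `missing_vfio_modules` is a hypothetical helper, and the module list is simply the one from my files above.

```shell
# Print every module from the listing above that is NOT named on an
# uncommented line of the given modules file. No output means all present.
missing_vfio_modules() {
    modules_file="$1"
    for mod in pci_stub vfio vfio_iommu_type1 vfio_pci vfio_virqfd; do
        # The module name must be the first word on the line.
        grep -q -E "^${mod}([[:space:]]|\$)" "$modules_file" || echo "$mod"
    done
}

# Usage on the host:
#   missing_vfio_modules /etc/modules
#   missing_vfio_modules /etc/initramfs-tools/modules
```

After `update-initramfs -u`, something like `lsinitramfs /boot/initrd.img-$(uname -r) | grep vfio` should list the modules inside the rebuilt image, assuming the image lives at the usual Ubuntu path.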

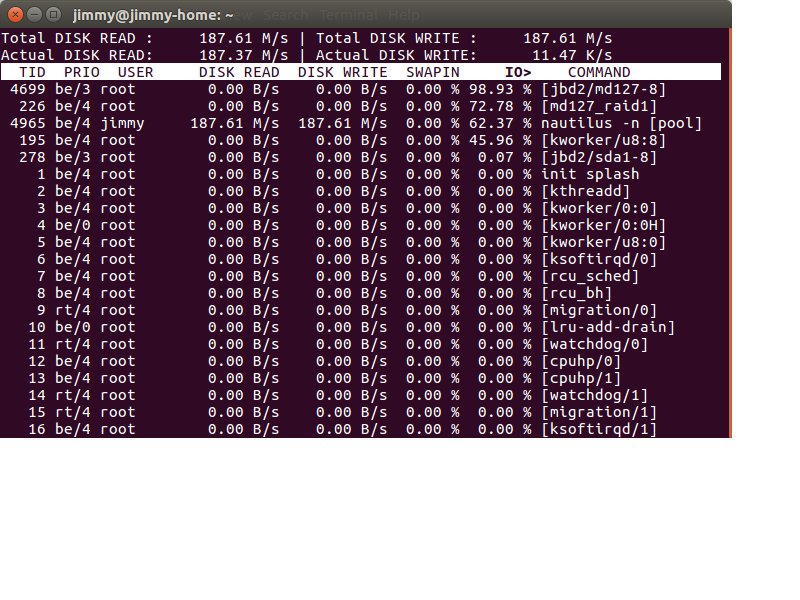

3. Configure the kernel to load vfio-pci before any GPU driver, and reserve the GPU for vfio-pci by its hardware IDs, which you can look up with “lspci -nnk”. After rebooting, run “lspci -nnk” again and make sure the kernel driver in use for the GPU is vfio-pci rather than radeon.

        jimmy@jimmy-home:~$ vi /etc/modprobe.d/vfio.conf
        softdep radeon pre: vfio-pci
        softdep amdgpu pre: vfio-pci
        options vfio-pci ids=1002:683d,1002:aab0 disable_vga=1

        01:00.0 VGA compatible controller [0300]: Advanced Micro Devices, Inc. [AMD/ATI] Cape Verde XT [Radeon HD 7770/8760 / R7 250X] [1002:683d]
            Subsystem: PC Partner Limited / Sapphire Technology Cape Verde XT [Radeon HD 7770/8760 / R7 250X] [174b:e244]
            Kernel driver in use: vfio-pci
            Kernel modules: radeon, amdgpu
        01:00.1 Audio device [0403]: Advanced Micro Devices, Inc. [AMD/ATI] Cape Verde/Pitcairn HDMI Audio [Radeon HD 7700/7800 Series] [1002:aab0]
            Subsystem: PC Partner Limited / Sapphire Technology Cape Verde/Pitcairn HDMI Audio [Radeon HD 7700/7800 Series] [174b:aab0]
            Kernel driver in use: vfio-pci
            Kernel modules: snd_hda_intel
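Reading the driver line by eye works for one card, but the check can also be scripted. This is a small sketch under my own naming (`driver_in_use` is hypothetical): it reads `lspci -nnk` output on stdin and prints the driver bound to the device with the given vendor:device ID.

```shell
# Print the "Kernel driver in use" value for the device whose
# [vendor:device] ID equals $1, reading `lspci -nnk` output on stdin.
# Prints nothing if the device is absent or has no driver bound.
driver_in_use() {
    awk -v id="[$1]" '
        # Device header lines start with the bus address, not whitespace.
        /^[0-9a-f]/ { hit = (index($0, id) > 0) }
        hit && /Kernel driver in use:/ {
            sub(/^.*Kernel driver in use:[ \t]*/, "")
            print
            exit
        }
    '
}

# Usage on the host (IDs are the ones from my card):
#   lspci -nnk | driver_in_use 1002:683d
#   lspci -nnk | driver_in_use 1002:aab0
```

Both the VGA function and its HDMI audio function should report vfio-pci. For a quick manual look, `lspci -nnk -d 1002:683d` narrows the listing to a single device.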

4. Running the QEMU processes that libvirtd starts as root is not good practice, but it is a quick way to let the guest access attachable storage.

Edit /etc/libvirt/qemu.conf to make this work: uncomment the user and group settings so that they are set to root, then restart libvirtd.
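The edit can be done by hand, but for repeat installs a one-liner is handy. The sketch below assumes the stock file carries the settings as `#user = "root"` and `#group = "root"` (check your copy first), and `set_qemu_user_root` is a hypothetical helper name.

```shell
# Set user and group to "root" in a libvirt qemu.conf, whether the lines
# are currently commented out or set to something else. Indented example
# lines inside comment blocks are left alone because the pattern requires
# the key at the start of the line. A backup is written to <file>.bak.
set_qemu_user_root() {
    conf="${1:-/etc/libvirt/qemu.conf}"
    sed -i.bak -E 's/^#?(user|group)[[:space:]]*=.*/\1 = "root"/' "$conf"
}

# Usage on the host, from a root shell:
#   set_qemu_user_root
#   systemctl restart libvirtd   # service name may differ by release
```

Keeping the `.bak` copy makes it easy to go back to an unprivileged user once the storage permissions are sorted out properly.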

These are the references I consulted:

https://bbs.archlinux.org/viewtopic.php?id=162768

https://wiki.archlinux.org/index.php/PCI_passthrough_via_OVMF#Using_vfio-pci

https://pve.proxmox.com/wiki/Pci_passthrough

https://ycnrg.org/vga-passthrough-with-ovmf-vfio/